Tools:Kinect skeletons streamer


log

04/12/2017

First test of the installation at Beursschouwburg: the streamers stream correctly, and after a fix in the viewer to detect "NaN" values in the data received from the streamers, the 3 Kinects were calibrated quite easily. The calibration is not perfect (being based on skeletons, that would be a miracle), but it is a good approximation of the positions.

27/11/2017

Panel to edit the filtering configuration of the skeletons, following the same logic as for the calibration matrices:

- the filtering configuration is stored on the streamer side,

- when a Kinect appears in the viewer, the viewer requests its configuration,

- the streamer sends the configuration to the viewer,

- the configuration UI is created on the viewer side,

- at each modification of the configuration, the viewer sends it to the streamer,

- the streamer applies it directly and updates its configuration file.
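The configuration round trip above can be sketched in-process as follows. This is a hypothetical illustration, not the project's code: `FilterConfig` and its two fields, `Streamer`, `Viewer`, and the space-separated text format are assumed stand-ins for the real filtering parameters, network messages and configuration file.

```cpp
#include <cassert>
#include <sstream>
#include <string>
#include <utility>

// Hypothetical filtering parameters: the real fields are not listed in these notes.
struct FilterConfig {
    float smoothing = 0.5f;
    float jitter_radius = 0.05f;
};

// Hypothetical text format, standing in for both the network message and the file.
std::string serialise(const FilterConfig& c) {
    std::ostringstream out;
    out << c.smoothing << ' ' << c.jitter_radius;
    return out.str();
}

FilterConfig deserialise(const std::string& text) {
    FilterConfig c;
    std::istringstream in(text);
    in >> c.smoothing >> c.jitter_radius;
    assert(!in.fail() && "malformed configuration");
    return c;
}

// The streamer owns the configuration and keeps its file up to date.
class Streamer {
public:
    explicit Streamer(std::string file_contents)
        : file_(std::move(file_contents)), config_(deserialise(file_)) {}

    // Reply to the viewer's request (sent when the Kinect appears in the viewer).
    std::string on_request() const { return serialise(config_); }

    // Apply a configuration sent by the viewer, then rewrite the file.
    void on_update(const std::string& message) {
        config_ = deserialise(message);
        file_ = serialise(config_);
    }

    const FilterConfig& config() const { return config_; }
    const std::string& file() const { return file_; }

private:
    std::string file_;     // stands in for the configuration file on disk
    FilterConfig config_;  // declared after file_, so it is initialised from it
};

// The viewer only mirrors the configuration in its UI.
class Viewer {
public:
    // The Kinect appeared: request its configuration and build the UI from it.
    void on_kinect_appeared(const Streamer& streamer) {
        ui_ = deserialise(streamer.on_request());
    }

    // The user changed a value: send the whole configuration back.
    void on_smoothing_changed(Streamer& streamer, float smoothing) {
        ui_.smoothing = smoothing;
        streamer.on_update(serialise(ui_));
    }

    const FilterConfig& ui() const { return ui_; }

private:
    FilterConfig ui_;
};
```

For example, a streamer whose file holds `0.25 0.1` hands `smoothing == 0.25` to the viewer when it connects; after `viewer.on_smoothing_changed(streamer, 0.75f)` the streamer's file reads `0.75 0.1`. Keeping the streamer as the single owner of the configuration means the viewer never has to persist anything itself.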

26/11/2017

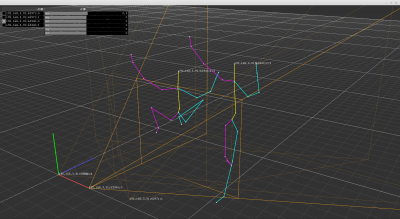

Calibration based on successive positions of a skeleton

The calibration algorithm uses 2 Kinects: one is the reference, the other is the one being calibrated. To start the process, one and only one skeleton must be present in each Kinect's view (the same person, you got it). 500 positions of the hip center are stored (about 16 s). Once all the positions are collected, the calibration can be computed.
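The collection rule can be sketched as below. It is a hypothetical illustration: `Vec3`, `HipPair`, `PairCollector` and the per-frame skeleton counts are assumed names, not the SkCalib code. Assuming a 30 frames-per-second skeleton stream, 500 samples correspond to about 16.7 s, consistent with the estimate above.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical position type (metres, in each Kinect's own camera space).
struct Vec3 { float x, y, z; };

// One sample: the hip center seen at the same moment by both Kinects.
struct HipPair {
    Vec3 reference;   // seen by the reference Kinect
    Vec3 calibrated;  // seen by the Kinect being calibrated
};

// Keeps a frame only when each Kinect tracks exactly one skeleton,
// until the target number of pairs is reached.
class PairCollector {
public:
    static constexpr std::size_t kTarget = 500;

    // ref_count / cal_count: skeletons tracked by each Kinect in this frame.
    // Returns true when the pair was stored.
    bool add(int ref_count, int cal_count, const Vec3& ref_hip, const Vec3& cal_hip) {
        if (complete() || ref_count != 1 || cal_count != 1) return false;
        pairs_.push_back({ref_hip, cal_hip});
        assert(pairs_.size() <= kTarget);
        return true;
    }

    bool complete() const { return pairs_.size() >= kTarget; }
    const std::vector<HipPair>& pairs() const { return pairs_; }

private:
    std::vector<HipPair> pairs_;
};
```

Frames with zero or several skeletons are simply skipped, so the capture takes longer than 16 s if the tracking drops out, but the stored pairs always refer to the same person.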

It uses 3 passes:

- first pass: computing the Z rotation to apply to the positions to calibrate. As Z points up, this rotation is the yaw[1].

- second pass: computing the X rotation to apply to the positions once the Z correction is applied. As X points right, this rotation is the pitch[1].

- last pass: computing the average offset between the reference positions and the rotated positions to calibrate, which gives the translation.

These transformations are stored in a matrix, ready to be sent to the streamers.
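A sketch of the last pass and of packing the result into one matrix, assuming yaw and pitch come from the first two passes, the Z rotation is applied before the X rotation, and a column-vector convention (`p_ref ≈ M * [p_cal, 1]`). `Vec3`, `Mat4`, `rotate`, `average_offset` and `calibration_matrix` are hypothetical names; the real viewer's matrix class and memory layout may differ.

```cpp
#include <array>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

struct Vec3 { double x, y, z; };

// Row-major 4x4 matrix, column-vector convention: p_ref ~= M * [p_cal, 1].
using Mat4 = std::array<std::array<double, 4>, 4>;

// Z rotation (yaw) first, then X rotation (pitch): R = Rx(pitch) * Rz(yaw).
Vec3 rotate(const Vec3& p, double yaw, double pitch) {
    const double cz = std::cos(yaw), sz = std::sin(yaw);
    const Vec3 a{cz * p.x - sz * p.y, sz * p.x + cz * p.y, p.z};  // first pass (Z)
    const double cx = std::cos(pitch), sx = std::sin(pitch);
    return {a.x, cx * a.y - sx * a.z, sx * a.y + cx * a.z};      // second pass (X)
}

// Last pass: average offset between reference positions and rotated positions.
Vec3 average_offset(const std::vector<Vec3>& ref, const std::vector<Vec3>& cal,
                    double yaw, double pitch) {
    assert(ref.size() == cal.size() && !ref.empty());
    Vec3 sum{0, 0, 0};
    for (std::size_t i = 0; i < ref.size(); ++i) {
        const Vec3 r = rotate(cal[i], yaw, pitch);
        sum.x += ref[i].x - r.x;
        sum.y += ref[i].y - r.y;
        sum.z += ref[i].z - r.z;
    }
    const double n = static_cast<double>(ref.size());
    return {sum.x / n, sum.y / n, sum.z / n};
}

// Packs both rotations and the translation into one matrix.
Mat4 calibration_matrix(double yaw, double pitch, const Vec3& t) {
    // The columns of R are the rotated basis vectors.
    const Vec3 ex = rotate({1, 0, 0}, yaw, pitch);
    const Vec3 ey = rotate({0, 1, 0}, yaw, pitch);
    const Vec3 ez = rotate({0, 0, 1}, yaw, pitch);
    return {{{ex.x, ey.x, ez.x, t.x},
             {ex.y, ey.y, ez.y, t.y},
             {ex.z, ey.z, ez.z, t.z},
             {0, 0, 0, 1}}};
}
```

The translation is averaged after rotating, so it is already expressed in the reference Kinect's frame and can go directly into the last column.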

Why not compute the roll[1]? I tried, but the poor quality of the recorded data made the calibration worse than without it, so I dropped this rotation.

An interesting subtlety is the way the Z axis, and then the X axis, are processed:

- getting the normalised direction between pairs of reference points,

- getting the normalised direction between the corresponding pairs of points to calibrate,

- projecting both directions onto the XY plane, as 2D vectors,

- accumulating the rotation between them,

- averaging: the Z rotation is computed and stored in calibration_matrix,

- but before going further, the directions must be rotated by the Z rotation,

- this is done with `calibration_matrix.inverse * directions * calibration_matrix`,

- then the same operation is repeated for the X axis, projecting the directions onto the YZ plane.
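The Z-then-X estimation can be sketched as follows, assuming the "pairs of points" are successive samples and that each pair's rotation is accumulated as a unit (cos, sin) before averaging, i.e. a circular mean; both are assumptions, not necessarily what SkCalib does. To stay short, the sketch rotates each calibrated direction directly by the estimated yaw instead of using the matrix product written above. All names are hypothetical.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

struct Vec3 { double x, y, z; };
struct YawPitch { double yaw, pitch; };
enum class Plane { XY, YZ };  // XY for the Z axis, YZ for the X axis

// Normalised direction from a to b; false if the points are (almost) identical.
bool direction(const Vec3& a, const Vec3& b, Vec3& out) {
    const double dx = b.x - a.x, dy = b.y - a.y, dz = b.z - a.z;
    const double len = std::sqrt(dx * dx + dy * dy + dz * dz);
    if (len < 1e-6) return false;
    out = {dx / len, dy / len, dz / len};
    return true;
}

Vec3 rotate_z(const Vec3& p, double a) {
    const double c = std::cos(a), s = std::sin(a);
    return {c * p.x - s * p.y, s * p.x + c * p.y, p.z};
}

// Average signed angle turning the projected calibrated directions onto the
// projected reference directions. Each pair contributes a unit rotation
// (cos, sin); the sums are turned back into an angle (circular mean).
double average_angle(const std::vector<Vec3>& ref, const std::vector<Vec3>& cal, Plane plane) {
    double sum_sin = 0, sum_cos = 0;
    for (std::size_t i = 0; i < ref.size(); ++i) {
        const double ru = plane == Plane::XY ? ref[i].x : ref[i].y;
        const double rv = plane == Plane::XY ? ref[i].y : ref[i].z;
        const double cu = plane == Plane::XY ? cal[i].x : cal[i].y;
        const double cv = plane == Plane::XY ? cal[i].y : cal[i].z;
        const double norm = std::hypot(ru, rv) * std::hypot(cu, cv);
        if (norm < 1e-12) continue;  // movement along the axis: no angular information
        sum_sin += (cu * rv - cv * ru) / norm;  // 2D cross product
        sum_cos += (cu * ru + cv * rv) / norm;  // 2D dot product
    }
    return std::atan2(sum_sin, sum_cos);
}

// ref and cal: positions of the same hip center, sample by sample.
YawPitch estimate_yaw_pitch(const std::vector<Vec3>& ref, const std::vector<Vec3>& cal) {
    assert(ref.size() == cal.size());
    std::vector<Vec3> ref_dirs, cal_dirs;
    for (std::size_t i = 0; i + 1 < ref.size(); ++i) {  // successive samples as pairs
        Vec3 r, c;
        if (direction(ref[i], ref[i + 1], r) && direction(cal[i], cal[i + 1], c)) {
            ref_dirs.push_back(r);
            cal_dirs.push_back(c);
        }
    }
    const double yaw = average_angle(ref_dirs, cal_dirs, Plane::XY);
    for (Vec3& c : cal_dirs) c = rotate_z(c, yaw);  // apply the Z correction first
    const double pitch = average_angle(ref_dirs, cal_dirs, Plane::YZ);
    return {yaw, pitch};
}
```

Summing sine and cosine instead of raw angles avoids a wrong average when individual angles straddle ±π; pairs whose projection collapses to a point are skipped because they carry no information about the rotation.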

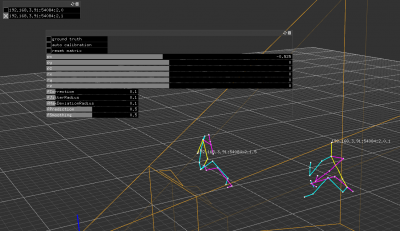

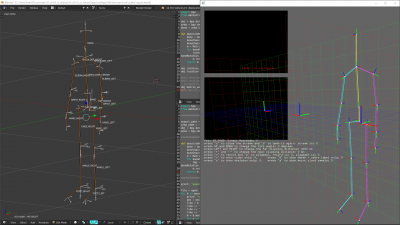

Image legend:

- white points: reference, or ground truth

- yellow points: points to be calibrated

- unlinked cyan points: calibrated points after rotation, before translation

- linked cyan points: calibrated points after the full matrix correction

There is also a 2D projection of the trajectories on the ground, for readability.

Developing the algorithm was fast thanks to raw. A raw "writer" was added to the viewer application; it stores each new pair of positions while the calibration data is being captured. To develop the algorithm, I started a new application that loads these positions through a raw "reader". Working on stored data made development easy, instead of having to stop the viewer, change the algorithm, restart the app, wait for the Kinects to connect, set the roles and move in front of the Kinects to generate the data.

See the SkCalib folder in src for the code.
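The notes do not describe the raw format, so this record/replay sketch is a hypothetical stand-in: each pair of hip positions is appended to a binary stream as six native-endian 32-bit floats, and the reader loads pairs until the stream runs out. `write_pair` and `read_pairs` are invented names.

```cpp
#include <cassert>
#include <istream>
#include <ostream>
#include <sstream>  // std::stringstream works as well as file streams, e.g. for tests
#include <vector>

static_assert(sizeof(float) == 4, "this sketch assumes 32-bit floats");

struct Vec3 { float x, y, z; };
struct HipPair { Vec3 reference; Vec3 calibrated; };

// Hypothetical "writer": appends one pair as 6 native-endian floats.
void write_pair(std::ostream& out, const HipPair& p) {
    const float v[6] = {p.reference.x,  p.reference.y,  p.reference.z,
                        p.calibrated.x, p.calibrated.y, p.calibrated.z};
    out.write(reinterpret_cast<const char*>(v), sizeof v);
    assert(out.good());
}

// Hypothetical "reader": loads every complete pair until the stream runs out.
std::vector<HipPair> read_pairs(std::istream& in) {
    std::vector<HipPair> pairs;
    float v[6];
    while (in.read(reinterpret_cast<char*>(v), sizeof v)) {
        pairs.push_back({{v[0], v[1], v[2]}, {v[3], v[4], v[5]}});
    }
    return pairs;
}
```

In the viewer, `write_pair` would be called on an `std::ofstream` opened with `std::ios::binary` for each stored pair; the offline application calls `read_pairs` on a matching `std::ifstream` and can rerun the calibration as often as needed without any Kinect connected.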

25/11/2017

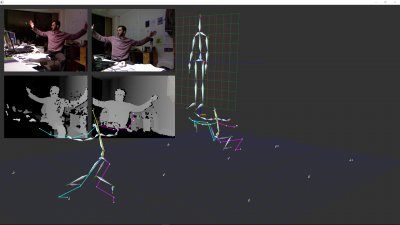

Working on visualisation and calibration software, called NetworkViewer in the repo. A first attempt at "auto calibration" gives reasonably good results. The orientations are not processed yet; I will work on this tomorrow.

This software is a stream visualiser. It displays the messages sent by the "streamer", the software that maps Kinect skeletons onto an avatar (see http://vimeo.com/242486050). In this example, the fields of view of the 2 Kinects largely overlap. This first calibration attempt is based only on positions.

24/11/2017

Back at my desk! Connecting 2 Kinects simultaneously works.

Work has started on software to calibrate the different points of view.

13/11/2017

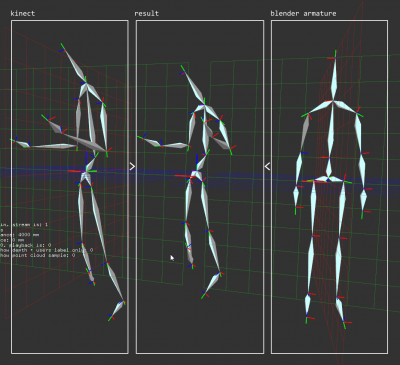

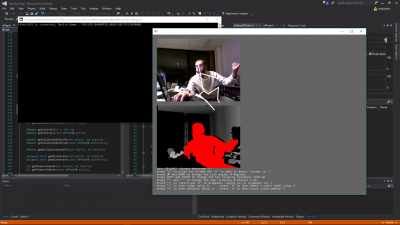

First attempts at mapping onto various skeletons: the hips and the "spine" cause problems in most models...

12/11/2017

Computing the relative orientations to send to Blender was just as tedious as the rest, but took less time. For those who like black magic: it took about 5 hours of head-scratching to write these 2 lines of code.

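```cpp
// Assumed meaning of the names (the "i" suffix read as "inverse"):
//   absolute_matrices[i]:        current absolute matrix of bone i, from the Kinect
//   absolute_matricesi[parent]:  inverse of the parent's current absolute matrix
//   model.delta_matricesi[i]:    inverse of the model's rest matrix of bone i relative to its parent
//   model.absolute_matricesi[i]: inverse of the model's absolute rest matrix of bone i
if (parent != NUI_SKELETON_POSITION_COUNT) {
    // Bone with a parent: remove the parent's orientation, then the model's rest offset.
    m = absolute_matrices[i] *
        absolute_matricesi[parent] *
        model.delta_matricesi[i];
} else {
    // Root bone (no parent): remove the model's absolute rest orientation.
    m = absolute_matrices[i] * model.absolute_matricesi[i];
}
```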
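One way to read the first branch of that snippet, assuming the `i` suffix means "inverse" and that matrices follow a row-vector convention (`v' = v * M`), so that a bone's absolute orientation is `local * parent_absolute`: multiplying by the inverse parent isolates the local rotation, and multiplying by the inverse rest offset of the model leaves only the rotation relative to the rest pose. The sketch checks that reading with plain 3x3 rotation matrices; `Mat3`, `mul`, `inverse_rotation`, `rotation` and `relative_orientation` are hypothetical helpers, not the project's code.

```cpp
#include <array>
#include <cassert>
#include <cmath>

// 3x3 rotation matrix, row-vector convention: v' = v * M.
using Mat3 = std::array<std::array<double, 3>, 3>;

Mat3 mul(const Mat3& a, const Mat3& b) {
    Mat3 r{};  // zero-initialised
    for (int i = 0; i < 3; ++i)
        for (int j = 0; j < 3; ++j)
            for (int k = 0; k < 3; ++k) r[i][j] += a[i][k] * b[k][j];
    return r;
}

// For a pure rotation, the inverse is the transpose.
Mat3 inverse_rotation(const Mat3& m) {
    Mat3 t{};
    for (int i = 0; i < 3; ++i)
        for (int j = 0; j < 3; ++j) t[i][j] = m[j][i];
    return t;
}

// Rotation by angle a around axis 0 (X), 1 (Y) or 2 (Z), for row vectors.
Mat3 rotation(int axis, double a) {
    assert(axis >= 0 && axis < 3);
    const int u = (axis + 1) % 3, v = (axis + 2) % 3;
    Mat3 m{};
    m[axis][axis] = 1.0;
    m[u][u] = std::cos(a);
    m[u][v] = std::sin(a);
    m[v][u] = -std::sin(a);
    m[v][v] = std::cos(a);
    return m;
}

// Same structure as the first branch of the snippet: remove the parent's current
// orientation, then the model's rest orientation relative to the parent.
Mat3 relative_orientation(const Mat3& absolute, const Mat3& parent_absolute,
                          const Mat3& rest_local) {
    return mul(mul(absolute, inverse_rotation(parent_absolute)),
               inverse_rotation(rest_local));
}
```

For the root bone there is no parent to remove, which is why the snippet falls back to the model's absolute rest matrix in its second branch.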

The rest of the changes are optimisation and data visualisation, such as the grid of 6 skeletons under the model.

10/11/2017

The alignment of the Kinect skeleton with the armature exported from Blender is now automatic; no more tuning the matrices by hand.

08/11/2017

Importing the bone matrices of the Blender armature into the application. The orientations are no longer computed by Blender, which caused big problems with the spin (rotations around the axis aligned with the bone's direction).

05/11/2017

First success: the relative orientations are computed correctly and sent to Blender via OSC[2].

23/10/2017

code archeology, re-enabling ofxKinectNui[3]

resources

- [1] Flight dynamics, Wikipedia: https://en.wikipedia.org/wiki/Flight_dynamics

- [2] Open Sound Control, official site and Wikipedia

- [3] ofxKinectNui: https://github.com/sadmb/ofxKinectNui